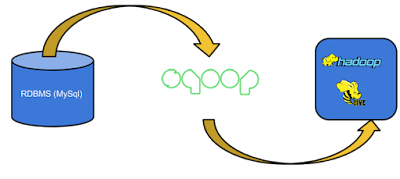

Sqoop Script for Importing Data from RDBMS to HDFS and from RDBMS to Hive

Sqoop-Import

The sqoop import command imports a table from an RDBMS into HDFS. Each row of the table is stored as a separate record in HDFS. Records can be stored as text files, or in binary form as Avro data files or SequenceFiles.

Importing an RDBMS Table to HDFS

Syntax:

$ sqoop import --connect <jdbc-url> --table <table-name> --username <username> --password <password> --target-dir <hdfs-directory> -m 1

--connect JDBC URL of the database to connect to (jdbc:mysql://localhost:3306/test)

--table Name of the source table to import (sqooptest)

--username Username for connecting to the database (root)

--password Password of the connecting user (12345)

--target-dir HDFS directory to import the data into (/output)

-m Number of map tasks to use for the import; 1 runs a single mapper (1)

For Eg:

| RDBMS TO HDFS |
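As a rough sketch, the HDFS import syntax above can be filled in with the example values from the option list (jdbc:mysql://localhost:3306/test, sqooptest, root, 12345, /output). The DRY_RUN variable and the import_to_hdfs function are conventions of this sketch only, not Sqoop features: by default the command is just printed, so nothing touches a database until DRY_RUN is set to 0.

```shell
#!/bin/sh
# Example values taken from the option descriptions above; adjust for your setup.
JDBC_URL="jdbc:mysql://localhost:3306/test"
TABLE="sqooptest"
DB_USER="root"
DB_PASS="12345"
TARGET_DIR="/output"

# DRY_RUN is an assumption of this sketch, not a Sqoop option:
# DRY_RUN=1 (default) only prints the command, DRY_RUN=0 runs it.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical helper wrapping the import command shown in the syntax above.
import_to_hdfs() {
  if [ "$DRY_RUN" = "1" ]; then run="echo"; else run=""; fi
  $run sqoop import \
    --connect "$JDBC_URL" \
    --table "$TABLE" \
    --username "$DB_USER" \
    --password "$DB_PASS" \
    --target-dir "$TARGET_DIR" \
    -m 1
}

import_to_hdfs
```

Running the script with DRY_RUN=0 on a machine where Sqoop and the MySQL JDBC driver are installed should write the rows of sqooptest under /output in HDFS. Passing the password on the command line is convenient for a demo; Sqoop also accepts -P to prompt for it instead.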

Importing an RDBMS Table to Hive

Syntax:

$ sqoop import --connect <jdbc-url> --table <table-name> --username <username> --password <password> --hive-import --hive-table <hive-table-name> -m 1

When --hive-import is specified, Sqoop imports the data into a Hive table rather than into a plain HDFS directory.

--connect JDBC URL of the database to connect to (jdbc:mysql://localhost:3306/test)

--table Name of the source table to import (sqooptest)

--username Username for connecting to the database (root)

--password Password of the connecting user (12345)

--hive-import Imports the table into Hive (a flag, takes no value)

--hive-table Sets the Hive table name to import (sqoophivetest )
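A minimal sketch of the Hive import, using the example values from the option list (jdbc:mysql://localhost:3306/test, sqooptest, root, 12345, sqoophivetest). As an assumption of this sketch, the command is wrapped in a hypothetical import_to_hive function with a DRY_RUN switch that is not part of Sqoop; by default it only prints the command.

```shell
#!/bin/sh
# Example values taken from the option descriptions above; adjust for your setup.
JDBC_URL="jdbc:mysql://localhost:3306/test"
TABLE="sqooptest"
DB_USER="root"
DB_PASS="12345"
HIVE_TABLE="sqoophivetest"

# DRY_RUN is an assumption of this sketch, not a Sqoop option:
# DRY_RUN=1 (default) only prints the command, DRY_RUN=0 runs it.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical helper wrapping the Hive import command shown in the syntax above.
import_to_hive() {
  if [ "$DRY_RUN" = "1" ]; then run="echo"; else run=""; fi
  $run sqoop import \
    --connect "$JDBC_URL" \
    --table "$TABLE" \
    --username "$DB_USER" \
    --password "$DB_PASS" \
    --hive-import \
    --hive-table "$HIVE_TABLE" \
    -m 1
}

import_to_hive
```

With DRY_RUN=0 on a cluster where both Sqoop and Hive are configured, the data should then be queryable from Hive as the sqoophivetest table.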
